Executive Summary

NVIDIA is positioning its technology to power "AI Factories," a redefinition of the data center that shifts from retrieval-based to generative computing. AI Factories are designed to generate "tokens," the fundamental building blocks of AI, at unprecedented scale and efficiency. With this pivot, NVIDIA aims to capitalize on growing demand for AI by providing a full-stack solution spanning chips, systems, and software. Key themes include the economic principle driving AI adoption (Jevons paradox), the emphasis on efficiency and performance (tokens per second per watt), and the application of AI across industries, from healthcare and automotive to robotics and telecommunications. NVIDIA's roadmap, covering the Blackwell, Rubin, and Rubin Ultra architectures, targets large generational performance gains and scale-up capability, underpinned by innovations in GPU design, networking, and co-packaged optics.

I. The "AI Factory" Concept and Its Economic Drivers

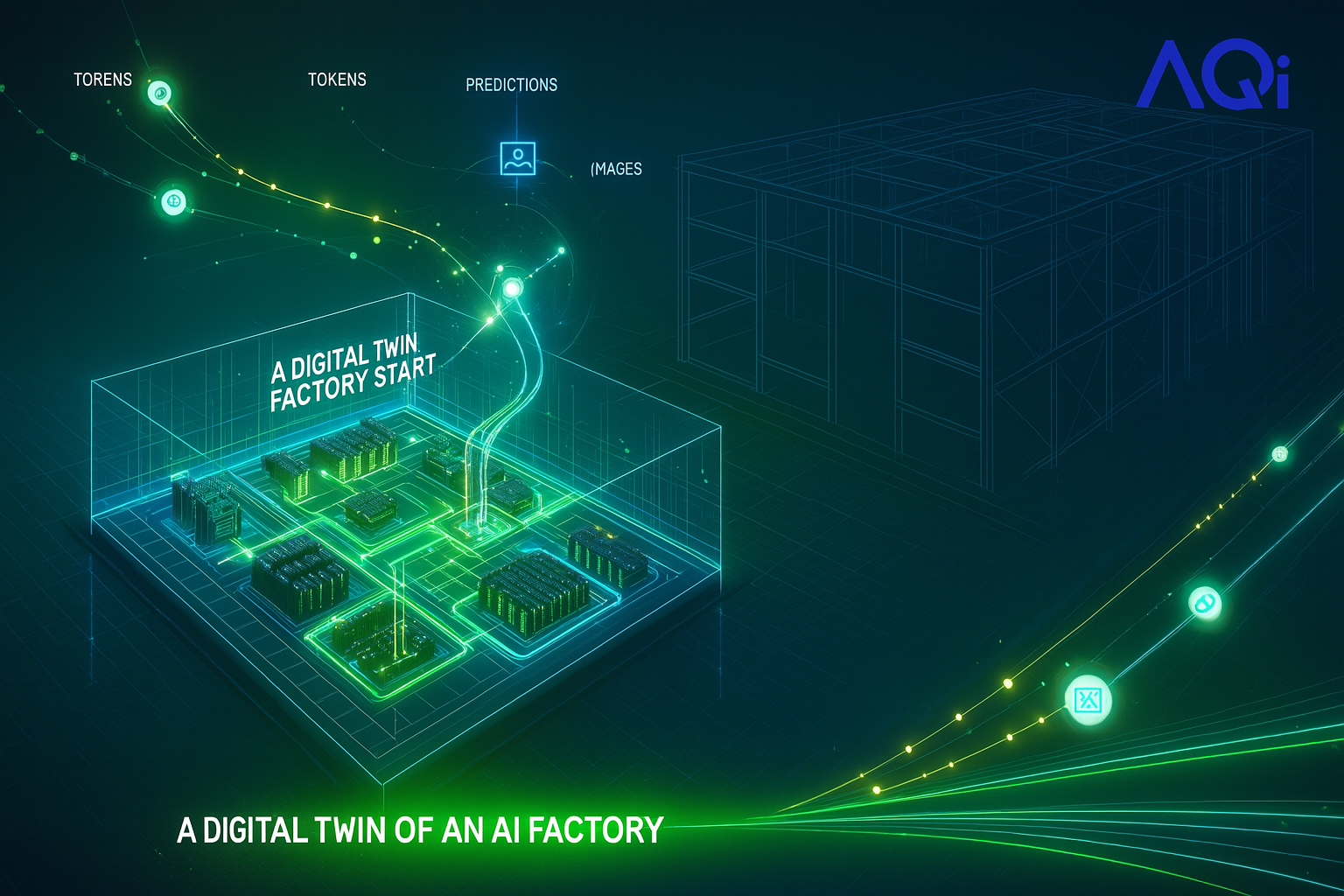

The central theme is NVIDIA's redefinition of data centers as "AI Factories."

- Generative vs. Retrieval Computing: "Traditional data centers are designed to retrieve pre-written software and execute it deterministically. AI factories are built to generate software on the fly. The logic is not fixed but emergent." Jensen Huang states, "In the past, we wrote the software and ran it on computers. In the future, the computer is going to generate the tokens for the software." This signals a fundamental shift in the computing paradigm.

- Tokens as the New Unit of Productivity: The output of AI Factories is "tokens – the atomic units of prediction, reasoning, and generation that power AI systems." The new metric for productivity is "tokens per second per watt," emphasizing both speed and energy efficiency.

- Jevons Paradox and the Flywheel Effect: NVIDIA's strategy is built on Jevons paradox: "when a resource becomes cheaper and more efficient, we consume more of it." As inference costs fall due to more efficient AI Factories, previously uneconomical AI applications become viable, leading to increased demand for tokens, which in turn drives demand for more compute and, consequently, more NVIDIA hardware. Jensen Huang summarizes this as: "The more you save, the more you make."

- Revenue Generation: AI Factories are directly linked to revenue potential. They "convert the most valuable digital commodity — data — into revenue potential." The efficiency and performance of these factories "directly affects your quality of service, your revenues, and your profitability."
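The link between "tokens per second per watt" and revenue can be made concrete with a short calculation. The following is a minimal sketch, assuming a facility whose output is limited by a fixed power budget. The function names and every figure in it (throughput, power draw, token price) are hypothetical placeholders chosen for illustration, not NVIDIA numbers.

```python
def tokens_per_second_per_watt(tokens_per_second: float, watts: float) -> float:
    """Efficiency metric: throughput divided by power draw."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return tokens_per_second / watts


def factory_revenue_per_hour(power_budget_watts: float,
                             efficiency_tok_per_s_per_w: float,
                             price_per_million_tokens: float) -> float:
    """Hourly revenue of a power-limited factory.

    With a fixed power budget, total throughput is efficiency * power,
    so revenue scales linearly with tokens per second per watt.
    """
    tokens_per_hour = efficiency_tok_per_s_per_w * power_budget_watts * 3600
    return tokens_per_hour / 1_000_000 * price_per_million_tokens


# Hypothetical numbers for illustration only.
old_eff = tokens_per_second_per_watt(tokens_per_second=50_000, watts=100_000)
new_eff = tokens_per_second_per_watt(tokens_per_second=200_000, watts=100_000)

# Same 1 MW power budget and the same token price in both cases.
old_rev = factory_revenue_per_hour(1_000_000, old_eff, price_per_million_tokens=2.0)
new_rev = factory_revenue_per_hour(1_000_000, new_eff, price_per_million_tokens=2.0)

print(f"efficiency gain: {new_eff / old_eff:.1f}x, revenue gain: {new_rev / old_rev:.1f}x")
```

Under these assumptions, a fourfold gain in efficiency yields a fourfold gain in hourly revenue at the same power budget. That is the sense in which efficiency "directly affects" revenue when power, not floor space or capital, is the binding constraint.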

II. Core Technologies and Architectures Powering AI Factories

NVIDIA's AI Factory vision is underpinned by a comprehensive suite of hardware and software.

- DGX Platform: This is NVIDIA's purpose-built system for AI. It includes "NVIDIA DGX SuperPOD to train and customize AI models" and is central to enterprises building their own AI factories, as seen with Lockheed Martin.

- GPU Architectures (Blackwell, Hopper, Rubin):

- Hopper: Described as "the fastest computer in the world," which "revolutionized artificial intelligence."

- Blackwell: Represents a significant leap in performance over Hopper. "Blackwell is way, way better than Hopper," offering "25x in one generation at ISO power" and "40 times the performance of Hopper" for reasoning models. It features "two Blackwell GPUs in one Blackwell package."

- Rubin: The next-generation architecture, scheduled for 2026, with "Rubin Ultra" following in 2027. Rubin includes a new CPU ("twice the performance of Grace") and GPU, "brand new networking smart NIC (CX9), NVLink 6, brand new HBM-4 memories." Rubin promises "900x scale up flops" compared to Hopper (1x) and Blackwell (68x).

- NVLink and Scale-Up: NVIDIA emphasizes "scale up" before "scale out." NVLink is crucial for this, enabling "every GPU to talk to every GPU at exactly the same time at full bandwidth." The "Grace Blackwell NVLink 72 rack" is a prime example, achieving "a one Exaflops computer in one rack" with "570 terabytes per second" memory bandwidth. The future Rubin Ultra will feature "NVLink 576 Extreme Scale-Up" with "15 exaflops" per rack.

- Software and Ecosystem (CUDA, AI Enterprise, etc.):

- CUDA: The foundational platform, with "6 million developers in over 200 countries" and "over 900 CUDA-X libraries and AI models." It's described as "literally everywhere" – in every cloud and data center.

- AI Blueprints/Microservices: Includes specific solutions like "AI Inference - Dynamo," "AI Inference Microservices - NIM," and "AI Microservices - CUDA-X."

- Dynamo: A "real-time orchestration layer that acts like the AI factory’s “operating system” – dynamically allocating GPU resources between prefill and decode." It significantly extends the performance of Blackwell.

- NeMo: For "Build, customize, and deploy multimodal generative AI."

- Omniverse Cloud: For "Integrate advanced simulation and AI into complex 3D workflows."

- Morpheus: For Cybersecurity.

- Metropolis: For Intelligent Video Analytics.

- Clara: For Healthcare.

- Isaac: For Robotics.

- cuOpt: For Decision Optimization.

- RAPIDS/Apache Spark: For Data Science.

- CUDA-X Libraries: Specific libraries like cuPyNumeric (for NumPy acceleration), cuLitho (computational lithography), Aerial (5G radio), cuOpt (optimization), cuDSS (sparse solvers for CAE/EDA), cuDF (data frames for Spark/Pandas), and Warp (physics library). These libraries accelerate specific scientific and industrial fields.

- Networking Innovation (Silicon Photonics): NVIDIA is investing heavily in optical networking to overcome power and cost limitations in scaling to millions of GPUs. The company announced "NVIDIA's first co-packaged optics, silicon photonic system," which is "the world's first 1.6 terabit per second CPO," to save "tens of megawatts" of power in data centers.
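To illustrate what "dynamically allocating GPU resources between prefill and decode" could mean in practice, here is a toy sketch. It is not Dynamo's API or its actual scheduling algorithm, neither of which is described in the source. The function `split_gpus`, its parameters, and all rates and queue sizes are hypothetical. The sketch simply divides a GPU pool in proportion to the estimated GPU-seconds of work queued for each phase.

```python
def split_gpus(total_gpus: int,
               prefill_tokens_queued: int,
               decode_tokens_queued: int,
               prefill_rate_per_gpu: float,
               decode_rate_per_gpu: float) -> tuple[int, int]:
    """Return (prefill_gpus, decode_gpus) for a toy proportional allocator.

    Work per phase is estimated as queued tokens divided by the per-GPU
    token rate for that phase (i.e. GPU-seconds needed to drain the queue).
    Each phase always keeps at least one GPU.
    """
    if total_gpus < 2:
        raise ValueError("need at least one GPU per phase")
    prefill_work = prefill_tokens_queued / prefill_rate_per_gpu
    decode_work = decode_tokens_queued / decode_rate_per_gpu
    total_work = prefill_work + decode_work
    if total_work == 0:
        prefill_gpus = total_gpus // 2  # idle system: split evenly
    else:
        prefill_gpus = round(total_gpus * prefill_work / total_work)
    # Clamp so neither phase is starved.
    prefill_gpus = min(max(prefill_gpus, 1), total_gpus - 1)
    return prefill_gpus, total_gpus - prefill_gpus


# Hypothetical load on a 72-GPU pool: decode-heavy, then prefill-heavy.
decode_heavy = split_gpus(72, 1_000_000, 100_000, 10_000, 500)
prefill_heavy = split_gpus(72, 3_000_000, 50_000, 10_000, 500)
print(decode_heavy, prefill_heavy)
```

Re-running the split as the queues change shifts GPUs toward whichever phase is the bottleneck. A production orchestrator would also have to account for factors this sketch ignores, such as the cost of moving cached state between GPUs and per-request latency targets.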

III. Applications and Industry Impact

AI Factories are designed to impact a vast array of industries and applications.

- Generative AI and Reasoning: The focus is on generating "thinking tokens" for complex problem-solving. A clear example demonstrates how a "reasoning model" can solve a complex wedding table seating problem, taking "almost 9,000 tokens" compared to a "one-shot" non-reasoning model that was "wrong."

- Physical AI and Robotics: "AI that understands the physical world. It understands things like friction and inertia, cause and effect, object permanence." This enables robotics, with NVIDIA introducing "Isaac GR00T N1," a "generalist foundation model for humanoid robots," built on synthetic data generation and simulation. The "Newton" project, a partnership with DeepMind and Disney Research, provides an "incredible physics engine" for training robots with "tactile feedback, rigid body, soft body simulation, super real-time."

- Digital Twins and Simulation: Blackwell's speed gains are "closing the gap between simulation and real-time digital twins." Omniverse and Cosmos are key platforms for this, enabling "AVs to learn, adapt, and drive intelligently."

- Enterprise IT Transformation: "Our entire industry is going to get supercharged as we move to accelerated computing." NVIDIA is partnering with major storage vendors to offer "GPU accelerated" storage systems. Dell, for example, will offer "a whole line of NVIDIA products Enterprise IT AI infrastructure systems and all the software that runs on top of it."

- Broad Industry Reach: The GTC conference itself spans "Healthcare... Transportation. Retail. Gosh, the computer industry. Everybody in the computer industry is here." Specific solutions listed include: Automotive (DRIVE), Cloud-AI Video Streaming (Maxine), Healthcare (Clara), Industrial AI (Omniverse), Telecommunications (Aerial), and Smart Cities.

IV. Challenges and Competition

While NVIDIA presents a strong "bull case," counterpoints and competitive factors are acknowledged.

- Elasticity of Demand: The "bear case" questions "whether demand is elastic enough to justify NVIDIA’s growth projections." Concerns include enterprises pulling back on AI spending, slow infrastructure deployment, or "marginal utility flatten[ing] as AI saturates certain use cases."

- Commoditization of Tokens/Models: "Open-source models, from Alibaba to DeepSeek, are rapidly improving and driving token prices down." If "tokens become commodities, and “good-enough” models can run efficiently without large clusters of high-end GPUs, the economics of token generation at scale could erode faster than NVIDIA expects."

- Competition in AI OS/Infrastructure: While NVIDIA has Dynamo, "this kind of system-level control is not exclusive to NVIDIA. Hyperscalers like Microsoft and Google are building tightly integrated software stacks for their custom chips. AMD is a major player, and startups like Cerebras and Groq are pursuing alternative architectures." Open-source projects like vLLM also contribute to a "more open and flexible future."

- Hardware Competition: "AMD’s MI300X already matches or beats the H100 on some inference workloads, and its roadmap is moving fast. Meanwhile, startups like Cerebras are approaching the same challenges with different – and, for many use cases, more performant and efficient – architectures."

- Investor Sentiment: NVIDIA's stock "dipped around 4% after Jensen’s keynote," indicating investor scrutiny on the sustainability of AI spending growth.
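The elasticity question at the heart of the bear case can be stated numerically. The sketch below assumes a constant-elasticity demand curve, Q = scale * P^(-elasticity), which is a textbook simplification and not a model taken from the source. The function names and the elasticity values are illustrative. Under this assumption, total spend on tokens rises after a price cut only when elasticity exceeds 1.

```python
def token_demand(price: float, elasticity: float, scale: float = 1.0) -> float:
    """Constant-elasticity demand curve: Q = scale * price ** (-elasticity)."""
    if price <= 0:
        raise ValueError("price must be positive")
    return scale * price ** (-elasticity)


def total_spend(price: float, elasticity: float, scale: float = 1.0) -> float:
    """Total spend on tokens: price times quantity demanded."""
    return price * token_demand(price, elasticity, scale)


def spend_ratio_after_price_cut(price_cut_factor: float, elasticity: float) -> float:
    """Spend after the cut divided by spend before it.

    price_cut_factor=10 means tokens become 10x cheaper.
    """
    before = total_spend(1.0, elasticity)
    after = total_spend(1.0 / price_cut_factor, elasticity)
    return after / before


# Illustrative elasticities: inelastic, unit-elastic, elastic.
for e in (0.5, 1.0, 1.5):
    ratio = spend_ratio_after_price_cut(10, e)
    print(f"elasticity={e}: total spend changes by {ratio:.2f}x")
```

With elasticity below 1, a tenfold price cut shrinks total spend. At exactly 1 spend is unchanged, and above 1 it grows. The Jevons-paradox bull case corresponds to the elastic regime, and the bear case amounts to doubting that token demand stays there.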

V. Future Outlook and Roadmap

NVIDIA's roadmap outlines a rapid pace of innovation.

- Annual Product Releases: NVIDIA commits to "a new product line every single year, X factors up," with architectures refreshed every two years.

- Continuous Scale-Up: The roadmap includes "Blackwell Ultra later this year, Rubin in 2026, and Rubin Ultra after that," all designed for a "hyper-accelerated token economy."

- Commitment to Safety and Ethics: Particularly in automotive AI, NVIDIA highlights its extensive work on safety, with "seven million lines of code safety assessed" and "over a thousand patents filed" to ensure "diversity, transparency and explainability" in AI systems.

- Accessibility and Broad Deployment: NVIDIA aims to make its AI Factory technology available across various form factors, from "DGX Station" (a "PC" for data scientists and researchers) to enterprise servers and supercomputers, through partnerships with OEMs (HP, Dell, Lenovo, Asus).
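The pace implied by the roadmap can be read off the scale-up FLOPS figures quoted in Section II (Hopper 1x, Blackwell 68x, Rubin 900x). The short sketch below derives the generation-over-generation factors from those three numbers. The helper name is an illustrative choice, and the calculation assumes all three figures share the same Hopper baseline, as the quoted comparison suggests.

```python
# Scale-up FLOPS relative to Hopper, as quoted in Section II.
SCALE_UP_FLOPS_VS_HOPPER = {"Hopper": 1, "Blackwell": 68, "Rubin": 900}


def generation_over_generation(relative: dict[str, float]) -> dict[str, float]:
    """Ratio of each generation's figure to the one before it.

    Relies on the dict preserving insertion order (oldest to newest).
    """
    names = list(relative)
    return {
        f"{prev} -> {curr}": relative[curr] / relative[prev]
        for prev, curr in zip(names, names[1:])
    }


steps = generation_over_generation(SCALE_UP_FLOPS_VS_HOPPER)
for step, factor in steps.items():
    print(f"{step}: {factor:.1f}x")
# Hopper -> Blackwell: 68.0x
# Blackwell -> Rubin: 13.2x
```

Read this way, the quoted 900x for Rubin corresponds to roughly a 13x step over Blackwell on this particular metric.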

In conclusion, NVIDIA is making a bold and comprehensive bet on the "AI Factory" as the future of computing infrastructure. By redefining data centers as generative engines of intelligence, and continually pushing the boundaries of performance and efficiency across its full technology stack, NVIDIA aims to remain the backbone of the rapidly expanding AI economy. However, the success of this vision will also depend on broader market adoption, competitive dynamics, and the evolving economics of AI.